👔 유명한 fashionMNIST Classifier에 Convolution과 Pooling을 적용한 후 성능 증가를 직접 경험해보았다. 이제 다양한 model tuning과 epochs를 조절하면서 소폭의 성능 증가에 기여할 수 있는 지 알아보도록 해보자!

👔 모든 코드는 GitHub 클릭!

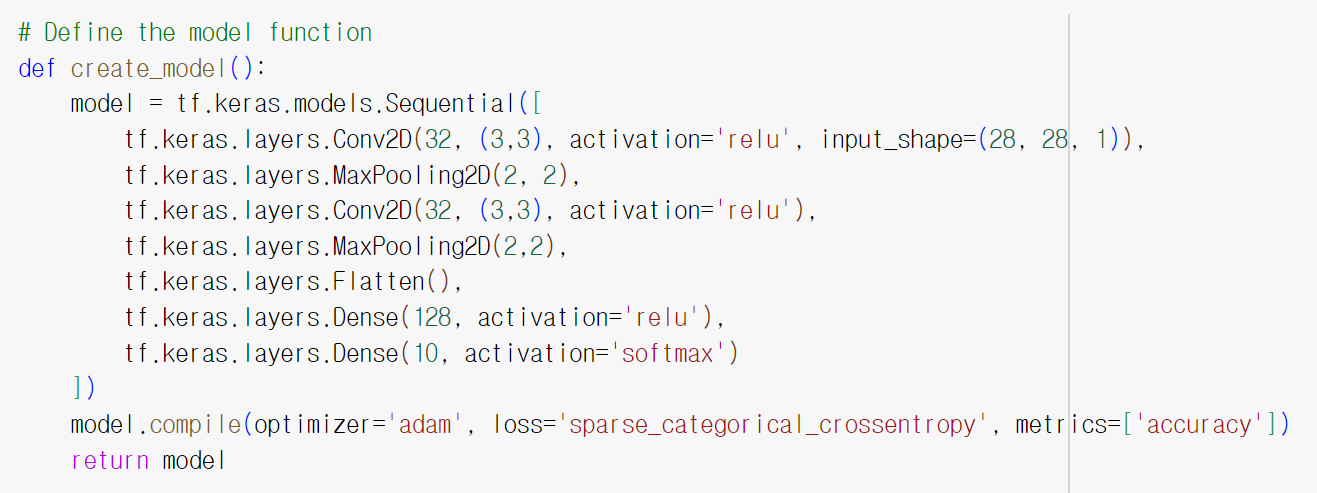

👔 default fashionMNIST Classifier 모델: <Convolution((3,3) x 32) + MaxPooling(2,2)> 2개 연산 → Flatten() → Dense(128, relu) → Dense(10, softmax)

① Adjusting the Number of Epochs

👔 epochs란 주어진 batch_size에 맞는 여러 batch를 미리 만들고 난 후, 동일 batch training을 반복적으로 몇 번 수행하는 가이다. 예를 들어 5 epochs라면 동일 batches를 5번 수행하고, 10 epochs라면 동일 batches를 10번 training 수행한다. 즉 동일 dataset을 몇 번 학습하는 지, epochs의 수로 조정할 수 있다. epochs 수를 늘리면서 learning weight을 세부적으로 조정해가며 학습 성능을 높일 수 있다(batch는 고정이다. 예를 들어 4개의 데이터 1, 2, 3, 4가 이고 2개의 batches가 있다면 각각의 batch에 1, 3 그리고 2, 4 이렇게 있다면 epochs가 증가함에 따라 batches는 고정). 하지만 해당 training set에 과적합되어 학습할 수 있으므로 과도한 학습은 피해야 한다. 아래 성능의 결과로 이를 유추할 수 있다.

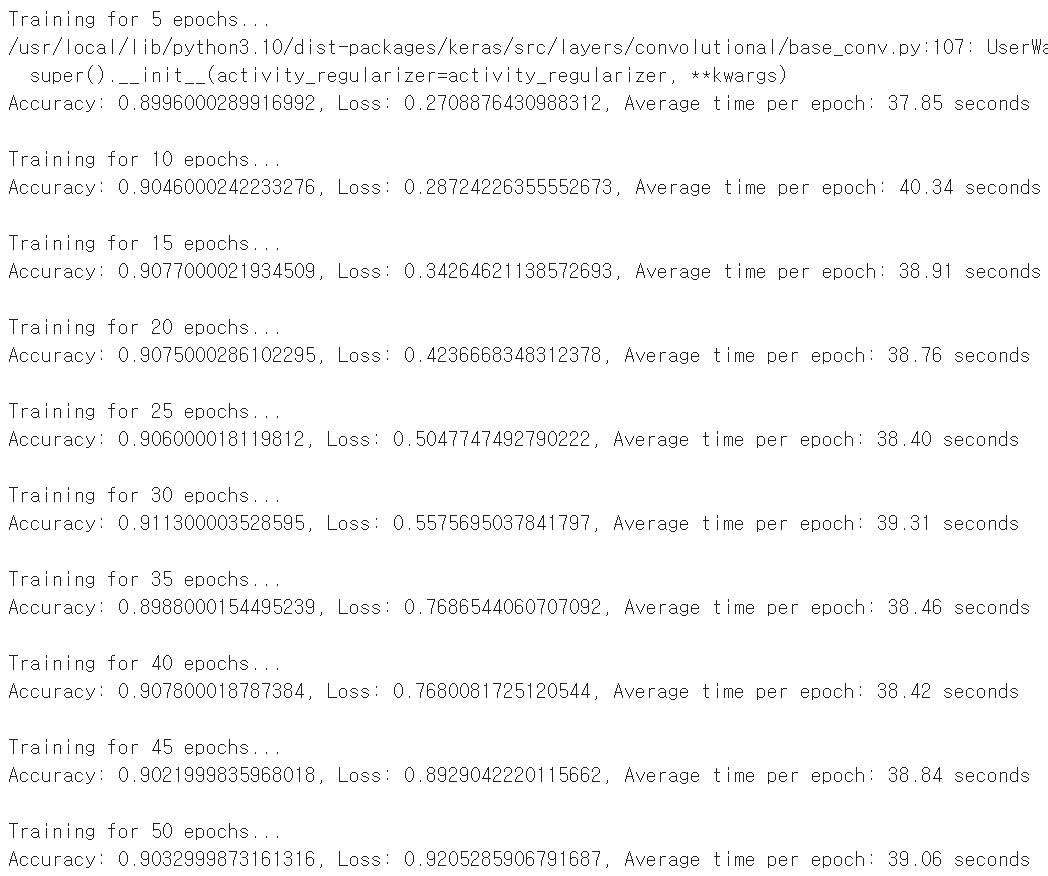

👔 Convolution과 Pooling을 적용한 fashionMNIST Classifier의 epochs를 조절하면서 accuracy in evaluation, loss in evaluation 그리고 average training time per epoch에 어떤 변화가 생기는 지 그래프로 시각화 해보자. epochs를 5부터 50까지 5단위로 증가하면서 3개 metrics의 변화를 살펴보았다.

① epochs가 증가할수록 accuracy 정확도의 확연한 증가는 없음을 알 수 있다. epochs = 5에서 epochs = 30으로 진행하면서 accuracy가 대체적으로 증가 추세를 보이다가 epochs = 30 일때 최댓값 0.9113을 찍었다. epochs=35 일때 accuracy 최솟값 0.8988

② 가장 낮은 accuracy를 보이는 epochs=35인 경우와 최댓값 accuracy를 보이는 epochs=30인 경우의 각각의 accuracy는 0.9113과 0.8988로 |max accuracy - min_accuracy|는 0.0125. 따라서 최댓값 accuracy 대비 전체 accuracy의 편차는 약 1.372%의 변동성에 그친다.

③ model loss의 경우 epochs가 증가함에 따라 loss값도 확연히 비례방향으로 증가하는 것을 볼 수 있다. model loss의 확연한 증가의 원인을 생각해볼 필요가 있다.

추정원인) ③-1 overfitting의 가능성

: 학습횟수 epochs를 계속 늘리다보면 training dataset에 최적화되어서 evaluation 과정에서 오히려 역효과를 낼 수 있다. 특히 해당 데이터셋에는 60000개의 데이터만 존재하므로 accuracy 값에는 큰 차이가 없지만, loss 값 같은 경우 prediction값과 실제값 차이를 극명히 보여주는 수치이기에, overfitting으로 인해 loss가 극명하게 증가하는 부분을 그래프를 통해서 알 수 있다.

추정원인) ③-2 high initial accuracy

: 처음에 어느정도 좋은 accuracy 성능을 보여주었다면, 이후 epochs를 증가시킨다 하더라도 오히려 training set에 최적화되어 test set과의 loss값은 극명하게 증가할 확률이 높다.

추정원인) ③-3 imbalanced dataset(하지만 해당 case에서 원인은 아님)

: classification task에서 (위 task는 fashionMINST classifier로 classification task이다) 애초에 imbalanced한 dataset을 놓고 진행한다면 accuracy와 다르게 loss는 증가하는 경향을 보이곤 한다. 하지만 이는 해당 task에서는 맞지 않다. 아래 코드 결과를 본다면, 각 class별 분포가 동일하게 되어 있다.

for num in set(training_labels):

print(list(training_labels).count(num)) #6000 each

④ epochs별 dataset training time은 큰 변화가 없다. epoch가 진행될 때마다 epoch별 모델이 학습하는 속도는, epoch 수와 상관이 없음을 유추할 수 있다.

👔 epochs 실험 결과: epochs 10에서 best performance. loss값은 계속 증가하는 상태에서 15일 때 epochs가 정점을 찍었다가 20부터 약간 감소한다. 하지만 epochs 15에서의 loss값은 0.3426으로 epoch 10에서의 loss값 0.2872보다 큰 폭으로 증가. 반면에 accuracy는 0.9046과 0.9077로 loss 변화폭에 비해 큰 차이가 없기 때문에 epochs 10을 best performance epochs로 설정. 이후 model tuning 실험에서 epochs를 모두 10으로 설정하고 진행.

coursera <intro to Tensorflow for AI, ML and DL>

'Deep Learning > Experiments' 카테고리의 다른 글

| 👔Improving fashionMNIST Classifier using Convolutions (1) | 2024.06.02 |

|---|---|

| 👔 fashionMNIST Classifier (0) | 2024.01.15 |

댓글