👏 저번 시간에 coefficient 두 종류 Pearson과 Spearman에 대해서 공부했었다!

👏 correlation 관련 수학 & 선대 기초 개념 span, basis, rank 그리고 응용한 projection 개념까지 이번 포스팅으로 간단히 알아보ZA

Pearson & Spearman correlation coefficients

🧓🏻 데이터분석에 있어서 꼭 알고 넘어가야 할 개념인 두 coefficients 종류 Pearson과 Spearman에 대해 자세히 알아보자 ≫ 저번 coursera 강좌 posting에서 아주 잠깐 배웠던 적이 있었다 🏄🏻 coefficient

sh-avid-learner.tistory.com

👏 모든 data를 좌표공간의 vector상으로 나타낼 수 있다. 또 여러 종류의 data, 즉 여러 개의 vector가 모이면 matrix 형태로 표현이 가능!

Scalar & Vector & Matrix (fundamentals)

▶ Linear Algebra 하면? 당연히 알아야 할 기본은 'Scalar(스칼라)' & 'Vector(벡터)' & 'Matrix(행렬)' ◀ 1. Scalar * concepts = "단순히 변수로 저장되어 있는 숫자" → vector 혹은 matrices에 곱해지는..

sh-avid-learner.tistory.com

1> span

🌞 span - '주어진 두 벡터의 (합이나 차와 같은) 조합으로 만들 수 있는 모든 가능한 벡터의 집합'

🌞 correlation posting에서 서로 두 변수가 correlation 관계가 아예 없음을 vector 상으로 두 vector가 직교함을 통해 표현할 수 있다고 하였다. 여기서 vector끼리의 관계를 크게 두 가지, 선형관계에 있다(linearly dependent) & 선형관계가 없다(linearly independent)로 표현할 수 있다.

🌞 1) 선형 관계에 있는 vector 집합> 이 때의 vector들의 span은 한 평면이 아닌, vector들이 겹쳐 올려져 있는 선으로 제한된다

🌞 2) 비선형관계에 있는 vector 집합> vector들은 선형적으로 독립되었다고 말하며 주어진 공간(2개의 벡터의 경우 $R^2$평면)의 모든 vector를 조합을 통해 만들어 낼 수 있다

2> basis

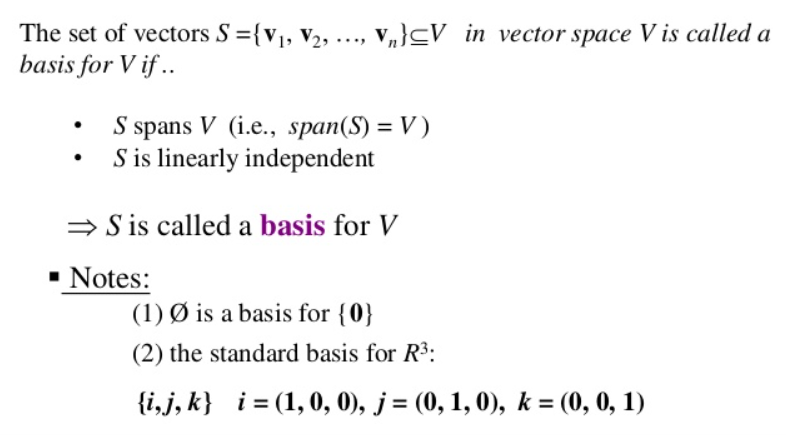

🌛 basis는 span의 역개념이다. V라는 vector space 벡터공간에 S set이라는 주어진 여러 vector들의 집합 - S가 존재하는데, 이 vector들(S set)이 V라는 벡터공간을 위한 basis를 만족하기 위해서는, 즉 S set이 V라는 vector space를 위한 basis가 될려면

🌛 ① S set이 V vector space 공간을 span(포괄) 해야 함 - 즉, vector space 내부 전체를 포괄해야 함

🌛 ② 그리고 S set이 선형적으로 독립이어야 함 (즉, S set 내 vector들이 서로 독립. 서로 같은 선 상에 있으면 안됨)

🌛 따라서, basis의 정의에 의해, 벡터 공간 V의 basis는 V라는 공간을 채울 수 있는 선형 관계에 있지 않은 벡터들의 모음이다

🌛 orthogonal basis>

→ basis에 추가로 orthogonal(수직)한 조건이 붙는, 주어진 공간을 채울 수 있는 서로 수직인 vector들을 말함

→ basis 내의 vector들이 서로 수직

🌛 orthonormal basis>

→ orthogonal basis에 추가로 normalized한 조건이 붙은 것으로, 길이가 1인 vector들을 말한다

→ basis 내의 vector들이 서로 수직 & vector length가 모두 1일 때

3> rank

🌼 matrix에 구성되는 vector들로 만들 수 있는 공간(span)의 차원을 rank라 한다

🌼 matrix의 차원 ≠ matrix의 rank

→ 이유는 matrix를 구성하는 vector들 중에 서로 linearly dependent한 관계도 있을 수 있기 때문이다!

🌼 gaussian elimination을 통해 matrix의 rank 확인 가능

🍁 gaussian elimination 🍁

🌼 주어진 matrix를 row-echelon form으로 바꾸는 계산과정

→ row-echelon form은 각 행에 대해서 왼쪽에 1, 그 이후 부분은 0으로 이뤄진 형태

→ 즉, 각 행의 시작부분이 '0의 나열 + 1'의 형태여야 함

→ row-echelon form은 일반적으로 upper-triangular 형태를 가진 matrix임

- row-echelon form형태로 바꾸는 계산과정 (하단) -

아래 예의 경우 맨 마지막 줄이 0으로만 되어 있어 해당 matrix P의 rank는 3이 아닌, 2이다!

🌼 재해석> 'matrix의 rank가 x이다' = '해당 matrix를 구성하는 모든 vector들을 통해 $R^x$ 공간만을 vector로 만들어낼 수 있다'는 뜻

w/code

★numpy.linalg.matrix_rank docu★

https://numpy.org/doc/stable/reference/generated/numpy.linalg.matrix_rank.html

from numpy.linalg import matrix_rank

linalg.matrix_rank(A, tol=None, hermitian=False)

→ 직접 parameter A에 rank를 구하고자 하는 matrix를 집어넣으면 원하는 rank가 나온다

4> linear projection>

🌸 (2차원 공간) vector w를 선 L로 투영된 결과로 나온 vector를 𝑝𝑟𝑜𝑗𝐿(𝑤)라고 한다 / 이 때 vector v를 선 L위의 단위 vector라고 하면,,

- 아래와 같이 표현 가능! -

🌸 for feature reduction

→ projection을 통해 여러 vector들을 한 line 선으로 내림으로써 data 저장 공간도 줄이고, data 복잡성도 줄이는 혜택들을 누릴 수 있다!

🌸 위 식을 w와 v의 곱에 대해 나열하면 projection된 벡터의 크기와 한 벡터의 크기의 곱으로 표현 가능

w/ dot product

🌸 ex) vector v [3, 1]에 vector w [-1, -2] 를 곱한 결과(dot product)는 vector v의 길이에 vector w를 v에 projection한(𝑝𝑟𝑜𝑗w(v)의 길이) 결과의 길이를 곱한 것과 같다

→ 만약 v와 w가 서로 다른 방향이라면 (-1)을 곱해주면 된다

🌸 아래 그림 참조

→ 즉, 다시 말하면 (벡터 a에 대한 벡터 b의 정사영 크기) * (벡터 a의 크기) = 벡터 a와 벡터 b의 dot product

w/code

Q. 임의의 vector v와 w가 있다고 할 때 벡터 v를 벡터 w에 projectiong한 결과

생긴 projected vector크기와 projected vector x와 y성분 각각을 출력하는 function을 만들자

def myProjection_norm(v, w):

#v를 w에 project

value = np.dot(v,w)/np.linalg.norm(w)

return value

def myProjection_coordinates(v, w):

#v를 w에 project

value = np.dot(v,w)/np.linalg.norm(w)

hat = w / np.linalg.norm(w)

return value*hat

* 썸네일 출처> https://ko.wikipedia.org/wiki/%EC%84%A0%ED%98%95%EB%8C%80%EC%88%98%ED%95%99

* 출처> w/dot product https://blog.naver.com/PostView.nhn?isHttpsRedirect=true&blogId=ao9364&logNo=221542210272

* 출처> linear projection https://www.youtube.com/watch?v=LyGKycYT2v0

'Math & Linear Algebra > Concepts' 카테고리의 다른 글

| Odds Ratio & log(Odds Ratio) (0) | 2022.07.11 |

|---|---|

| eigenvalue & eigenvector (0) | 2022.05.14 |

| Pearson & Spearman correlation coefficients (0) | 2022.05.13 |

| Cramer's Rule (+exercise) (0) | 2022.05.09 |

| Basic Derivative - 미분 기초 (0) | 2022.04.18 |

댓글