🌟 로지스틱 회귀 포스팅에서 MLE기법을 통해 model을 결정한다고 하였다. 로지스틱 회귀의 식을 더 deep하게 수학적으로 들어가, 어떤 모델을 고를 지 수식으로 연산하는 과정에서 MLE가 핵심으로 사용되는데, 이번 시간에는 MLE를 수학적인 개념으로 좀 더 자세하게(꽤 deep하게) 알아보고자 한다.

🌟 추가로 아래 그림에서 보듯이 추후 포스팅에서는 로지스틱 회귀와 MLE를 같이 연관시켜 알아보자!

* 정의 & concepts>

🌟 모수적인 데이터 밀도 추정방법으로, 파라미터 $\theta = (\theta_1, ... , \theta_m)$으로 구성된 어떤 확률밀도함수 $P(x|\theta)$에서 관측된 표본 데이터 집합을 $x = (x_1, x_2, ... , x_n)$이라 할 때, 이 표본들에서 파라미터 $\theta = (\theta_1, ... , \theta_m)$를 추정하는 방법

🌟 쉽게 말하면, 주어진 data 여러 x들이 있는데, 해당 x를 가장 잘 설명하는 최적의 distribution을 찾는 것 - (확률밀도함수 찾기 - 확률밀도함수 관련 parameter $\theta$ 찾기)

🌟 ex) 예를 들어 아래 그림과 같이 주어진 여러 x data 점들이 있고 해당 data 점들을 가장 잘 설명하는 최적의 'normal distribution'을 찾는다면 MLE 주황색 곡선을 찾을 수 있다. (식을 이용한 MLE 기법은 다음 포스팅에 자세히 설명 예정)

🌟 직관적으로 보자면, 특정 모수 parameter들의 집합으로 이루어진 특정 분포가 주어진 x를 가장 잘 설명하는 분포인지 알아보기 위해, 해당 x에서의 분포까지의 높이를 모든 x별로 다 계산해서 각각 곱한다. 이 곱한 결과를 'likelihood(가능도)'라 하며, 해당 likelihood가 최대가 되는 분포를 최종적으로 정한다.

🌟 식

① $L$ = $L_1$ x $L_2$ x $L_3$ x ... x $L_n$

(총 n개의 x data가 존재하고 각각의 likelihood를 모두 곱한 결과를 최종적인 likelihood라 하자.)

② $\prod_{i=1}^{N} L_{i}$ = $p(x_1|\hat{\theta})$ x $p(x_2|\hat{\theta})$ x $p(x_3|\hat{\theta})$ x ... x $p(x_N|\hat{\theta})$

(총 likelihood는 각 x별 모수 $\theta$ 모음을 갖고 있는 분포 - 확률밀도함수값을 모두 곱한 것임을 뜻한다.)

③ $L$ = $\prod_{i=1}^{N} p(x_i|\hat{\theta})$

(즉, 정리하면 위와 같이 정리할 수 있다.)

④ $lnL$ = $\sum_{i=1}^{N} lnp(x_i|\hat{\theta})$

(이후 미분의 편의성을 위해 양변에 log를 붙여보자 - log likelihood function)

⑤ $\frac{\partial}{\partial \theta}$ $lnL(\theta|x)$ = $\sum_{i=1}^{N}$ $\frac{\partial}{\partial \theta}$ $lnp(x_i|\hat{\theta})$ = 0

(분포 모수 $\theta$에 대해서 편미분을 하고, 미분결과값이 0일 때의 $\theta$ 값을 찾는다!

∴ 그 결과 구해진 $\theta$로 이루어진 분포가 우리가 주어진 x data로 이루어진 원하는 분포.

(모수 $\theta$가 두 종류 이상으로 이루어져 있다면, 각 종류별로 미분한 결과(즉 각각 편미분)를 0으로 두어 해당 모수 값을 구하면 된다)

* w/logistic regression?>

Logistic Regression Model (concepts)

** ML 개요 포스팅에서 다룬 'supervised learning'은 아래와 같은 절차를 따른다고 했다 (↓↓↓↓하단 포스팅 참조 ↓↓↓↓) ML Supervised Learning → Regression → Linear Regression 1. ML 기법 구분 💆..

sh-avid-learner.tistory.com

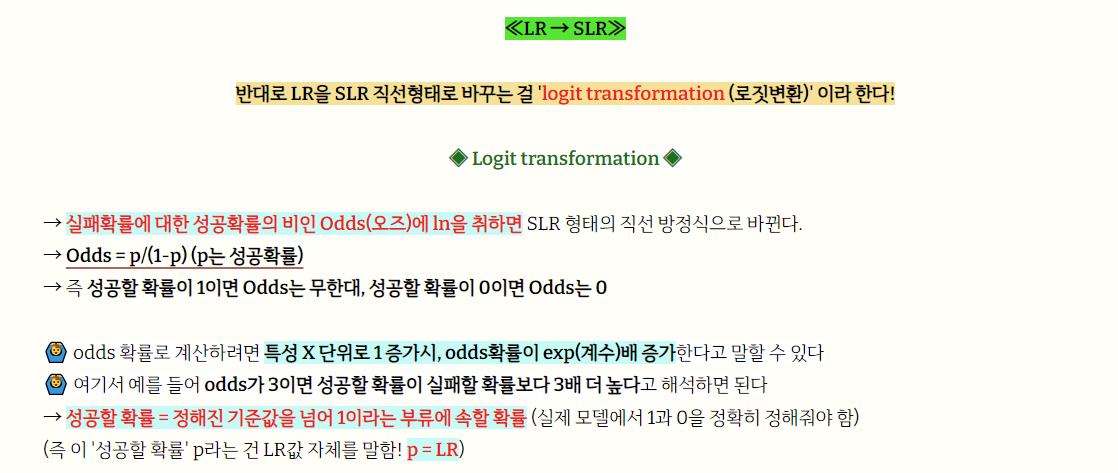

🌟 일명 '오즈비'로 불린 odds는 확률로 0이상 1이하의 값을 가지나, 시그모이드 함수 형태로 곡선을 보이기에, 우리는 '오즈비'에 로그를 취해 직선 함수 형태로 바꿔주었다.

🌟 직선으로 바뀌었지만, ln 로그를 취함으로써 y값의 range가 $-\infty$ ~ $+\infty$의 범위를 보여 OLS -least squares method로 주어진 data와 직선간의 거리 제곱합의 방법을 이용해 최적의 fitting line을 찾을 수가 없다. (OLS를 logisitc에서 사용할 수 없는 이유)

🌟 따라서 최적의 logistic model을 찾기 위한 방법으로 MLE(Maximum Likelihood Estimaiton) 기법을 택함. 주어진 x data를 이용해 data를 가장 잘 설명하는 logistic model을 찾아보자.

🎈 MLE의 likelihood는 Odds에서의 p 성공확률을 뜻한다.

🎈 따라서 우리는 임의의 LR 직선을 세우고, logit transformation한 다음, 각 data별 p 성공확률(likelihood)을 모두 구해야 한다.

(여기서 1이 아닌 0으로 class가 분류되는 data는 (1- SLR 곡선 값)이 likelihood이다.)

🎈 《logistic model selection 절차》

① $\theta^T = [\theta_0, \theta_1, ... , \theta_n]$를 모수로 갖고 있고, 두 class(A, B)로 분류된 LR을 먼저 식으로 나타내면

$P(y_i = A | x_i;\hat{\theta}) = \theta^T \bar{x}$

$\theta^T = [\theta_0, \theta_1, ... \theta_n], \bar{x} = [1, x_0, x_1, ... , x_n]^T$

② likelihood를 구하기 위해, [0, 1] range로 구성된 sigmoid 곡선으로의 변환 - logit transformation 수행

$h(x) = \sigma(\theta^{T}\bar{x}) = \cfrac{1}{(1+e^{-\theta^{T}\bar{x}})}$

→ 즉, likelihood로 나타내면 $P(y_i = A | x_i;\hat{\theta}) = \cfrac{1}{(1+e^{-\theta^{T}\bar{x}})}$

→ class B의 경우 likelihood는 $P(y_i = B | x_i;\hat{\theta}) = 1 - \cfrac{1}{(1+e^{-\theta^{T}\bar{x}})}$

③ binary problem과 같이, 두 class A와 class B의 각 likelihood를 합치면 (bernoulli distribution에 의해 - 곧 포스팅)

$P(y_i | x_i;\hat{\theta}) = h(x_i)^{y_i} (1-h(x_i))^{1-y_i}$

→ 개별 $y_i$가 아닌, 전체 y로 나타내자면

$P(y|x;\theta)$ = $\prod_{i=1}^{m} h(x_i)^{y_i} (1-h(x_i))^{1-y_i}$ = likelihood of $\theta$ = $L(\theta)$

(각 data의 likelihood를 모두 곱한다는 부분에서는 각 data의 독립성이 전제됨)

④ maximing $L(\theta)$ - 로그 취하기

$L(\theta)$ = $\sum_{i=1}^{m} y_i log(\sigma(\theta^{T}\bar{x_i})) + ... + (1-y_i)log((1-\sigma(\theta^{T}\bar{x_i})))$

⑤ $\theta$에 대해 편미분하기

$\cfrac{\partial L(\theta)}{\partial \theta}$ = $\cfrac{y}{\sigma(\theta^{T}\bar{x_i})}$$\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial \theta}$ + ... + $\cfrac{1 - y}{1 - \sigma(\theta^{T}\bar{x_i})}$$(-1)\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial \theta}$

+ 여기서 우리는 $\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial \theta}$를 아래와 같이 분해할 수 있고

$\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial \theta}$ = $\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial (\theta^T\bar{x})}$$\cfrac{\partial (\theta^T\bar{x})}{\partial \theta}$

+ 쉽게 미분되는 sigmoid 성질을 이용해 다시 풀어쓰면

$\cfrac{\partial \sigma(\theta^{T}\bar{x_i})}{\partial (\theta^T\bar{x})}$$\cfrac{\partial (\theta^T\bar{x})}{\partial \theta}$ = $\sigma(\theta^{T}\bar{x_i})$$(1-\sigma(\theta^T\bar{x}))$$\bar{x}$

+ 풀어 쓴 위 항을 본 식에 대입하면

$\cfrac{\partial L(\theta)}{\partial \theta}$ = $(y - \sigma(\theta^T\bar{x})\bar{x}$을 최종적으로 얻는다.

⑥ GD(Gradient Descent) 개념을 적용해 $\theta$에 대입하면

$\theta^+$ = $\theta^{-}$ + $\alpha\cfrac{\partial L(\theta)}{\partial \theta}$

⑦ 위 주어진 GD식을 통해 최적의 $\theta$를 구하면 된다.

⑧ 구한 최적의 $\theta$로 구성된 최적의 logistic model - sigmoid function을 구하면 된다.

- 끝! -

🙌🏻 위 수식 진행과정처럼 완전히 똑같은 과정으로 MLE 기법을 normal distribution에도 적용할 수 있다. MLE 기법을 통해 주어진 x data에 가장 잘 맞는 normal distribution의 모수 $\mu$와 $\sigma$를 알아보는 과정을 수학적으로 풀어보자. (추후 포스팅)

* 썸넬 출처) https://programmathically.com/maximum-likelihood-estimation/

* 출처1) 최대우도법 개념 설명 https://www.youtube.com/watch?v=XhlfVtGb19c

* 출처2) MLE 기법 문헌 http://www.sherrytowers.com/mle_introduction.pdf

* 출처3) using MLE - logistic regression https://www.youtube.com/watch?v=TM1lijyQnaI

* 출처4) 갓 STATQUEST - MLE w/ logistic regression https://www.youtube.com/watch?v=BfKanl1aSG0

* 출처5) 갓 STATQUEST - MLE explained https://www.youtube.com/watch?v=XepXtl9YKwc

'Statistics > Concepts(+codes)' 카테고리의 다른 글

| MLE for the normal distribution (1) | 2022.06.27 |

|---|---|

| Auto-correlation + Durbin-Watson test (0) | 2022.06.17 |

| Bayesian Theorem (0) | 2022.05.07 |

| distribution》poisson distribution (포아송분포) (0) | 2022.05.06 |

| distribution》binomial distribution (이항분포) (0) | 2022.05.06 |

댓글